My research interests lie in collaborative learning and privacy-protection techniques, which is what I work on as an ESR at bitYoga and a PhD student at the University of Stavanger.

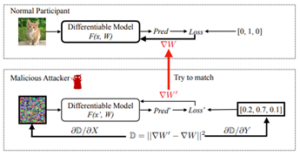

Distributed collaborative learning protects data privacy by sharing models rather than data. However, researchers have found that training data can be recovered from deep learning models. Such privacy attacks are referred to as reconstruction attacks. Recent works have restored training data from gradients alone. In conventional deep learning, the model is trainable: given the original data and labels, the complete gradient information can be computed through backpropagation. Gradient-matching based attacks try to recover an image by minimizing the difference between the gradient produced by dummy data and the ground-truth gradient on the same model. Ideally, when the dummy image is close to the ground truth, the difference between the two gradients is close to 0. The gradient difference is measured with an L2 loss, and L-BFGS is the usual choice of optimizer.
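The matching objective described above can be written compactly. The notation here is an assumption chosen for illustration: $F$ is the shared model with parameters $W$, $\ell$ is the training loss, $(x', y')$ are the dummy data and label, and $\nabla W$ is the ground-truth gradient observed by the attacker.

```latex
x'^{*},\, y'^{*} \;=\; \arg\min_{x',\, y'}
\left\| \frac{\partial\, \ell\big(F(x', W),\, y'\big)}{\partial W} \;-\; \nabla W \right\|_2^2
```

When the dummy pair reproduces the true training pair, the two gradients coincide and the objective reaches 0, which is the ideal case mentioned in the text.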

iDLG further showed that the label can be derived from the gradient of the last fully connected layer. This method does not depend on recovering the data and is highly accurate, but it only supports a batch size of 1. The InvertingGradients method found that a total variation loss can significantly improve image quality, so that the reconstruction attack can restore ImageNet-sized images. GradInversion proposes a method that recovers labels from the fully connected layer gradient of a batch. However, the batch cannot contain duplicate labels: when labels repeat, the method restores only one of them. In addition, the authors propose running the restoration from several different initializations, aligning the resulting images with RANSAC-flow, and using the aligned image as a regularizer.
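The single-sample label inference attributed to iDLG above can be illustrated with a small sketch. It is not the authors' code: it assumes a softmax cross-entropy loss, a batch size of 1, and non-negative inputs to the last layer (for example, after a ReLU). The function names `last_layer_weight_gradient` and `infer_label` are hypothetical. Under these assumptions the gradient with respect to the logits is `p_i - 1` for the true class and `p_i` for every other class, so only the true class's weight-gradient row is negative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def last_layer_weight_gradient(weights, bias, features, label):
    """Gradient of softmax cross-entropy w.r.t. the last-layer weights (one sample).

    weights: one row per class; features: input to the last layer,
    assumed non-negative (e.g. post-ReLU activations).
    """
    logits = [
        sum(w * h for w, h in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
    probs = softmax(logits)
    # dL/dz_i = p_i - 1 for the true class, p_i for every other class.
    dlogits = [p - (1.0 if i == label else 0.0) for i, p in enumerate(probs)]
    # dL/dW_i = dL/dz_i * features
    return [[g * h for h in features] for g in dlogits]


def infer_label(weight_grad):
    """Return the class whose gradient row is negative (smallest row sum)."""
    row_sums = [sum(row) for row in weight_grad]
    return min(range(len(row_sums)), key=row_sums.__getitem__)
```

An attacker who observes only `weight_grad` can call `infer_label(weight_grad)` without reconstructing the image. The sketch covers a single sample only, which reflects the batch size = 1 limitation noted above.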

In my recent research, we found that the complete label information can be recovered directly from the fully connected layer parameters of the batch data, regardless of whether duplicate labels exist. We also found that multiple model and gradient pairs can be used to restore the same batch of images. Our work further explored the possibility of recovering the training images from two consecutive model updates.

Gradient-matching based attacks remind us that the private information contained in gradients cannot be ignored, and that scenarios in which gradients are shared (common in federated learning) require additional privacy-enhancing measures.

The figure above shows the principle of gradient-matching based attacks, taken from the paper “Deep Leakage from Gradients”.

Jiahui Geng – ESR3.