Explainability plays a relevant role since it enables the resolution of disagreements between the algorithms and the pathologists.

The virtual secondment at Erasmus MC Rotterdam consisted of crash courses on the characterization of bladder cancer. Every week, I attended a course on the molecular characterization and pathology of bladder cancer with an expert uropathologist from the host institution. I also attended the weekly lab meeting of the bladder cancer group, where on one occasion I presented my work to date and introduced artificial intelligence and image processing. This meeting served as a journal club where everyone in the Department of Urology would likewise present their work.

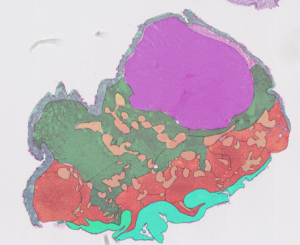

At the weekly department meeting, several talks from group members introduced all aspects of bladder cancer, from early detection to follow-up treatment. The crash course on molecular characterization of non-muscle invasive bladder cancer (NMIBC) consisted of several private meetings with an expert uropathologist, in which we analysed histopathological slides of NMIBC. It is good practice to know the data you are working with, and this was a great opportunity to do so. Working closely with a trained uropathologist gave me great insight into assessing samples myself. For this reason, I started to annotate some of the slides and later brought these annotations to our meetings so we could discuss and evaluate them. Annotating tissue types was generally a simple task, since haematoxylin and eosin (H&E) staining gives different tissues distinct colours. The exception was invasive cancerous areas, where urothelium invades the lamina propria and it is much more difficult to distinguish the border (if there is any) between layers. Also, bladder cancer is a heterogeneous disease that can present a wide range of scenarios in each patient, hence the assessment of a case remains highly subjective.

As a seconded collaborator, I also presented my current line of work on grading whole slide images of non-muscle invasive bladder cancer according to the World Health Organization 2004 grading system, and briefly introduced concepts of artificial intelligence and image processing. Although my current project is a work in progress, the members were intrigued by the processing of medical data and were keen on adopting artificial intelligence algorithms to process their data and find underlying patterns not visible to the naked eye. Although such systems have consistently provided outstanding results, it is impossible to craft a system without any errors. Errors can be random, producing false positives or false negatives for certain samples, or systematic, induced by bias. Bias arises from limited amounts of training data that do not fully represent the target population, which results in systematic errors. Even in a hypothetical scenario where the dataset is completely heterogeneous, there would still be traces of bias, which makes erroneous predictions unavoidable. For this reason, explainability plays a relevant role since it enables the resolution of disagreements between the algorithms and the pathologists, regardless of whose judgement was incorrect. In this way, explainability can aid pathologists in evaluating the predictions provided by a system that was originally taught based on their expertise.

A deeper understanding of the clinical aspects and challenges that arise during routine laboratory practice helps in developing and fitting better algorithms that truly represent an expert pathologist’s behaviour. Interdisciplinary secondments like this one bring medicine and engineering together for a closer and more fruitful collaboration. Artificial intelligence in pathology poses challenges to engineers and pathologists alike, as it requires a solid foundation in clinical decision-making. Explainable algorithms are a necessary requirement to fill the gaps these challenges raise in a reproducible and reliable manner that is compatible with laboratory practices. Omitting explainability from computational pathology for clinical decision support systems creates ethical conflicts with medical values and, consequently, with individuals’ healthcare. Extensive work is still needed to make engineers and pathologists alike aware of the need to collaborate on the challenges and limitations of transitioning from opaque to transparent algorithms. Explainability becomes an ethical prerequisite for the adoption of digital pathology with artificial intelligence assisted tools.

Saul Fuster Navarro – ESR5.